The Computer Revolution: From Mechanical Tools to Smart Machines

The computer is one of the most transformative inventions in human history. From simple counting devices to powerful machines capable of artificial intelligence, computers have reshaped how humans work, communicate, learn, and live.

The evolution of computers did not happen overnight; it is the result of thousands of years of human effort to simplify calculation, process information, and automate tasks.

This journey can be divided into several major phases—starting from early calculating tools, progressing through mechanical and electro-mechanical machines, and finally arriving at modern digital computers. Each stage reflects technological innovation driven by scientific discovery, social needs, and industrial advancement.

Early Tools of Calculation (Before 1600)

Abacus (c. 3000 BCE)

The abacus is often considered one of the earliest calculating devices. It was widely used in ancient civilizations such as Mesopotamia, China, Egypt, and Greece. Though simple, it allowed users to perform arithmetic operations like addition, subtraction, multiplication, and division.

Tally Marks and Counting Systems

Early humans used stones, sticks, bones, and tally marks to count goods, livestock, and time. These primitive methods laid the foundation for numerical thinking.

Importance of This Era

- Introduced the concept of systematic calculation

- Helped develop numerical systems

- No automation, entirely human-operated

Mechanical Computing Devices (1600–1800)

Napier’s Bones (1617)

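Invented by John Napier, these numbered rods made multiplication and division easier. They reduced multiplication to looking up single-digit products and adding them, a form of lattice multiplication. They were not based on logarithms, which Napier had introduced separately in 1614.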
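The way the rods turn multiplication into lookup and addition can be illustrated with a minimal Python sketch. It assumes each rod lists the multiples of one digit as (tens, units) pairs; the function names `rod` and `multiply_by_digit` are hypothetical and chosen only for this illustration.

```python
def rod(digit):
    """Return one rod: the multiples 1x..9x of `digit` as (tens, units) pairs."""
    return [divmod(digit * row, 10) for row in range(1, 10)]


def multiply_by_digit(number, multiplier):
    """Multiply `number` by a single digit (1-9) by reading one row across the rods."""
    if not 1 <= multiplier <= 9:
        raise ValueError("multiplier must be a single digit from 1 to 9")

    # Lay out one rod per digit of the number and pick the row for the multiplier.
    row = [rod(int(d))[multiplier - 1] for d in str(number)]

    # Read right to left: each result digit is the units of one cell plus
    # the tens of the cell to its right, plus any carry.
    digits = []
    carry = 0
    tens_from_right = 0
    for tens, units in reversed(row):
        total = units + tens_from_right + carry
        digits.append(total % 10)
        carry = total // 10
        tens_from_right = tens

    leading = tens_from_right + carry
    if leading:
        digits.append(leading)
    return int("".join(str(d) for d in reversed(digits)))


print(multiply_by_digit(425, 6))  # 2550
```

Multiplying by a multi-digit number repeats this row reading once per digit of the multiplier and adds the shifted partial results by hand.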

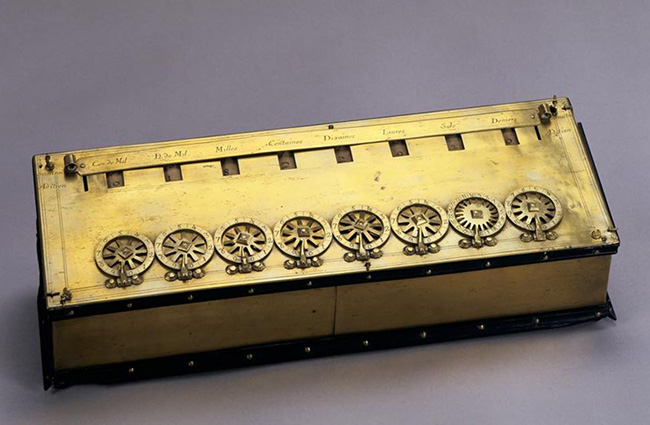

Pascaline (1642)

Blaise Pascal, a French mathematician, invented the Pascaline, one of the first mechanical calculators. It could perform addition and subtraction using gears and wheels.
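The carrying behavior of geared digit wheels can be modeled roughly in Python. This is only a sketch of the idea, not a description of Pascal's actual mechanism: it assumes a fixed row of decimal wheels stored least significant digit first, and the function name `add_on_wheels` is hypothetical.

```python
def add_on_wheels(wheels, amount):
    """Add `amount` to a row of decimal wheels (least significant wheel first).

    Each wheel holds a digit 0-9. When a wheel passes 9 it wraps to 0 and
    advances the next wheel by one, which is the carry.
    """
    result = list(wheels)
    carry = 0
    for i in range(len(result)):
        amount, digit = divmod(amount, 10)  # next digit of the amount to add
        total = result[i] + digit + carry
        result[i] = total % 10
        carry = total // 10
    # Any carry past the last wheel is lost, as on a fixed-width machine.
    return result


# Six wheels showing 97, then add 5.
print(add_on_wheels([7, 9, 0, 0, 0, 0], 5))  # [2, 0, 1, 0, 0, 0], i.e. 102
```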

Leibniz’s Stepped Reckoner (1673)

Developed by Gottfried Wilhelm Leibniz, it extended Pascal’s design so that multiplication and division could also be performed mechanically.

Key Features

- Mechanical gears and levers

- Limited accuracy and speed

- Required human operation

Conceptual Foundations of Modern Computers (1800–1900)

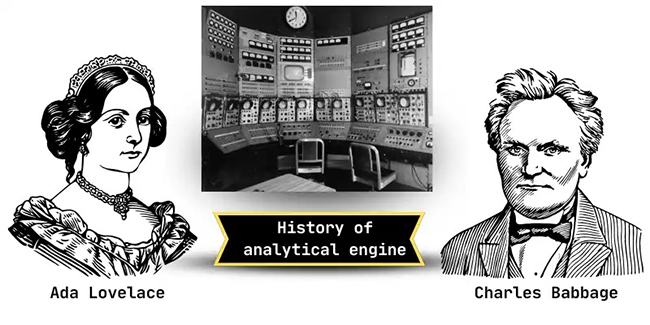

Charles Babbage – The “Father of the Computer”

Charles Babbage designed two revolutionary machines:

Difference Engine (1822)

- Designed to compute mathematical tables

- Never fully completed

Analytical Engine (1837)

- First concept of a general-purpose computer

- Included:

  - Input (punched cards)

  - Processor (mill)

  - Memory (store)

  - Output
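How these four parts cooperate can be sketched with a toy model in Python. This is an illustrative assumption, not Babbage's actual card format: the instruction tuples, the variable names `v1` to `v3`, and the `run` function are all hypothetical.

```python
import operator

# The "mill" performs arithmetic, like the processing unit.
MILL = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def run(cards, store):
    """Execute instruction cards against a store (memory) and return the output."""
    store = dict(store)  # copy so the caller's values are not modified
    output = []
    for card in cards:   # cards are read one at a time, in order
        op = card[0]
        if op == "print":
            output.append(store[card[1]])
        else:
            _, a, b, dest = card
            store[dest] = MILL[op](store[a], store[b])
    return output


# Input: a short deck of cards. Store: two starting values.
cards = [
    ("mul", "v1", "v2", "v3"),  # v3 = v1 * v2
    ("add", "v3", "v1", "v3"),  # v3 = v3 + v1
    ("print", "v3"),
]
print(run(cards, {"v1": 6, "v2": 7}))  # [48]
```

The same separation of program input, processing unit, memory, and output reappears in the electronic computers described in later sections.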

Ada Lovelace – First Computer Programmer

Ada Lovelace published an algorithm for the Analytical Engine in 1843, a method for computing Bernoulli numbers, and is widely regarded as the world’s first programmer.

Significance

- Introduced core computer concepts

- Programming ideas emerged

- No electricity used

Electro-Mechanical Computers (1890–1944)

Tabulating Machine (1890)

Invented by Herman Hollerith, the tabulating machine was used to process the 1890 U.S. Census. It read data from punched cards and counted it electrically.

Harvard Mark I (1944)

- Designed by Howard Aiken

- Used electromechanical relays

- Very large and slow by today’s standards

Limitations

- Large size

- High maintenance

- Slower processing speed

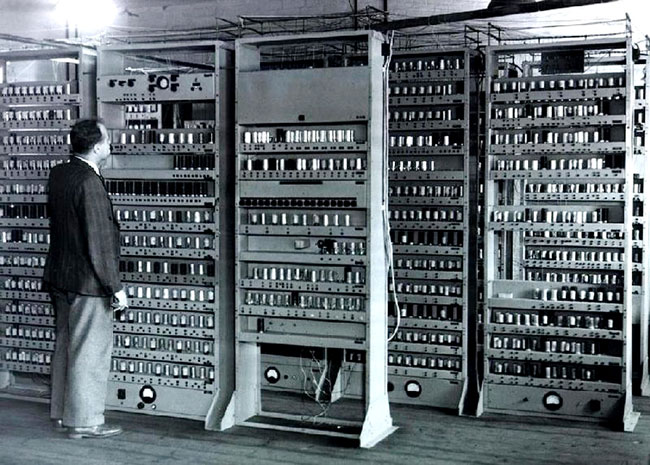

First Generation Computers (1940–1956)

Vacuum Tube Computers

Key Examples

- ENIAC (1946)

- EDVAC

- UNIVAC I

Characteristics

- Used vacuum tubes

- Consumed massive power

- Generated excessive heat

- Occupied entire rooms

- Programming done in machine language

Achievements

- High-speed calculations

- Used in military and scientific research

Second Generation Computers (1956–1963)

Transistor-Based Computers

Major Breakthrough

The transistor, invented at Bell Labs in 1947, replaced the vacuum tube as the basic switching component.

Advantages

- Smaller size

- Lower power consumption

- More reliable

- Less heat production

Programming Languages

- Assembly Language

- FORTRAN

- COBOL

Usage

- Scientific research

- Business data processing

Third Generation Computers (1964–1971)

Integrated Circuits (ICs)

What Changed?

Integrated circuits combined many transistors and other components on a single silicon chip.

Features

- Faster processing

- Smaller and cheaper

- Increased reliability

- Operating systems introduced

Examples

- IBM System/360

- Honeywell 6000 series

Impact

- Computers became commercially viable

- Multi-programming possible

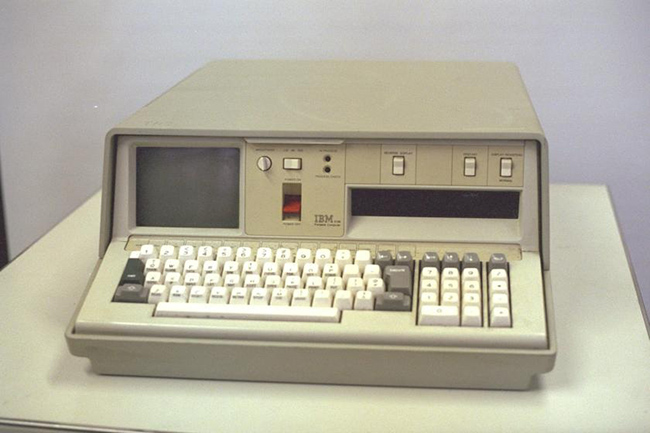

Fourth Generation Computers (1971–Present)

Microprocessors and Personal Computers

Microprocessor Revolution

In 1971, the Intel 4004 became the first commercially available microprocessor.

Key Developments

- Entire CPU on a single chip

- Birth of Personal Computers (PCs)

Popular Systems

- Apple I & II

- IBM PC

- Microsoft Windows

- Macintosh

Features

- Graphical User Interface (GUI)

- Mouse and keyboard input

- Networking and internet

Impact

- Computers entered homes

- Education, business, and entertainment transformed

Fifth Generation Computers (Present & Future)

Artificial Intelligence and Beyond

Focus Areas

- Artificial Intelligence (AI)

- Machine Learning

- Quantum Computing

- Robotics

- Natural Language Processing

Characteristics

- Self-learning systems

- Voice recognition

- Image processing

- Decision-making abilities

Examples

- Supercomputers

- AI assistants

- Autonomous vehicles

- Quantum computers (experimental)

Evolution of Computer Size and Power

| Era | Size | Speed | Power use |
|---|---|---|---|
| First Generation | Room-sized | Slow | Very high |
| Second Generation | Cabinet | Faster | Lower |
| Third Generation | Smaller | Faster | Efficient |
| Fourth Generation | Desktop/Laptop | Very fast | Very efficient |
| Fifth Generation | Nano/Cloud-based | Extremely fast | Optimized |

The evolution of computers is a remarkable story of human innovation and intellectual progress. From simple counting tools like the abacus to intelligent machines capable of learning and decision-making, computers have continuously transformed the way humans solve problems and manage information. Each stage of development—mechanical, electro-mechanical, and digital—introduced breakthroughs that increased speed, accuracy, and efficiency.

Today, computers are deeply integrated into every aspect of modern life, including education, science, business, healthcare, and communication. With the rise of artificial intelligence, quantum computing, and advanced automation, the future of computers promises even greater possibilities. Understanding the evolution of computers not only highlights past achievements but also helps us appreciate the technological foundations shaping the world of tomorrow.

Sources

Oxford Dictionary of Computing

IBM Archives – History of Computers

Computer History Museum – Mountain View, California

Encyclopaedia Britannica – Computer History & Generations

National Museum of American History (Smithsonian) – Computing Artifacts
